Emulating PS2 Floating-Point Numbers: IEEE 754 Differences (Part 1)

So you want to emulate PS2 floating-point numbers? Be warned: the PS2 doesn't follow the IEEE 754 specification. Let's dive into how to make it happen!

Terminology

- IEEE 754 - A technical standard for floating-point numbers established in 1985 by the Institute of Electrical and Electronics Engineers (IEEE). Most modern computer hardware follows this standard.

- SoftFloat - A software implementation of floating-point arithmetic.

- PS2 - Short name for the PlayStation 2 console.

Introduction

This is the start of a series about emulating PS2 floating-point numbers. Emulating them is a bit of a pain because the PS2 does not follow the IEEE 754 standard like most modern computers. It uses its own variation of the standard, which I like to call the PS2 IEEE 754 variant, the PS2 floating-point variant, or simply PS2 float.

Floating-point operations are usually performed by the hardware of the host machine, which usually follows the IEEE 754 standard. That means your phone, PC, or your mom's old laptop most likely all follow the IEEE 754 standard.

Unfortunately, because the PS2 deviates from the IEEE 754 standard, we can't use the host's standard floating-point operations when programming. Instead, we have to design a custom implementation that emulates PS2 floating-point operations in software. This is called a "SoftFloat" or "Software Floating-Point".

Problems With Emulating Floating-Point

SoftFloat implementations are usually MASSIVELY slower than hardware, no matter how they are designed. Instead of a computer chip directly computing the floating-point result, we have to calculate it programmatically and call functions to get the same results.

Then we have the issue of debugging. Our SoftFloat implementation is not compliant with the IEEE 754 standard, so we have to use the PS2 as a baseline to verify results. If we have an issue, we have to investigate whether it's a bug or an undocumented quirk in the PS2 IEEE 754 variant.

Floating-Point Format

I will quickly explain the floating-point number format; be aware that some details will be missing. If you want to learn more about floating-point numbers, I recommend checking out the IEEE 754 Wikipedia article, the References section, or searching the web.

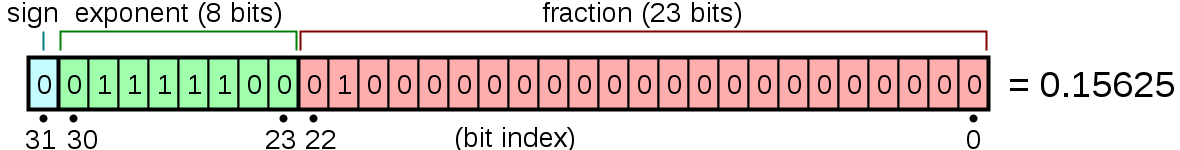

A 32-bit register stores a floating-point number with the following structure:

```
Name      Bits
------    ------
Sign      31
Exponent  30-23
Mantissa  22-0
```

Bits 22-0 are called the fraction bits, mantissa, or significand. I will be using the term mantissa.

The Sign Bit

Bit 31 is the sign bit. A 0 denotes a positive number, and a 1 represents a negative number. Flipping this value flips the sign.
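As a small sketch of that last point, flipping the sign only requires toggling bit 31 of the raw 32-bit value. The helper name `flip_sign` is made up for this illustration.

```rust
/// Toggle the sign bit (bit 31) of a raw 32-bit float encoding.
/// `flip_sign` is a hypothetical helper, not part of any library.
fn flip_sign(bits: u32) -> u32 {
    bits ^ (1u32 << 31)
}

fn main() {
    // Raw encoding of 1.5 as a 32-bit float.
    let positive = 1.5f32.to_bits();
    let negative = flip_sign(positive);
    println!("{:#010X} -> {:#010X}", positive, negative);
    println!("{} -> {}", f32::from_bits(positive), f32::from_bits(negative));
}
```

Because only one bit changes, applying `flip_sign` twice returns the original encoding.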

The Exponent

The exponent field occupies bits 30-23 and needs to represent both positive and negative exponents. Because the field is an unsigned integer (always positive), the value stored in it is offset from the actual exponent by an exponent bias. To get the actual exponent, subtract the exponent bias from the value stored in the exponent field. For IEEE 754 single-precision floats the bias is 127, which means the 8-bit exponent field (0 to 255) can represent exponents from -127 to 128.

```
| Exponent Field Value | Actual Exponent Value |
------------------------------------------------
| 0                    | -127                  |
| 127                  | 0                     |
| 200                  | 73                    |
```

For example, to express an exponent of zero, store 127 in the exponent field.

Similarly, an exponent field value of 200 resolves to an actual exponent of 73, since 200 - 127 = 73.

The exponent is a power of two, but the field only stores the (biased) power itself, not the resulting value.

When converting the raw bits into a float, the scale factor is computed as 2^(exponent - 127), where exponent is the value stored in the exponent field.
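The bias arithmetic above can be sketched in a few lines. This is only an illustration; the names `EXPONENT_BIAS`, `exponent_field`, and `actual_exponent` are assumptions chosen for this example.

```rust
/// Exponent bias for 32-bit floats.
const EXPONENT_BIAS: i32 = 127;

/// Extract the 8-bit exponent field (bits 30-23) from a raw encoding.
fn exponent_field(bits: u32) -> u32 {
    (bits >> 23) & 0xFF
}

/// Subtract the bias from the stored field to get the actual exponent.
fn actual_exponent(bits: u32) -> i32 {
    exponent_field(bits) as i32 - EXPONENT_BIAS
}

fn main() {
    // 0x3F800000 encodes 1.0: exponent field 127, actual exponent 0.
    println!("{}", actual_exponent(0x3F80_0000));
    // An exponent field of 200 resolves to 200 - 127 = 73.
    println!("{}", actual_exponent(200u32 << 23));
}
```

The cast to `i32` happens before the subtraction so that a field value below 127 yields a negative exponent instead of wrapping around.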

The Mantissa

Bits 22-0 make up the mantissa or fraction, which holds the digits of the number to the right of the radix point (the binary equivalent of the decimal point). Even though the stored mantissa is 23 bits long, there is a hidden leading bit called the "implicit leading bit" (left of the radix point) that is not stored in the floating-point number, which makes the mantissa effectively 24 bits long. When the mantissa is extracted and used for arithmetic, you add the implicit bit by setting bit 23 of the standalone mantissa.

When the mantissa is stored back into the floating-point number, you clear bit 23 to remove the implicit bit. For normalized numbers, the implicit bit is always assumed to be 1. If you read IEEE 754 docs and see the mantissa referred to as 1.mantissa, the 1. is the implicit bit.
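Here is a minimal sketch of adding and removing the implicit bit, assuming a normalized number (non-zero exponent field). The constant and function names are hypothetical.

```rust
/// Bit 23 of the standalone mantissa is the implicit leading bit.
const IMPLICIT_BIT: u32 = 1 << 23;
/// Mask for the 23 stored mantissa bits (bits 22-0).
const MANTISSA_MASK: u32 = 0x007F_FFFF;

/// Extract the stored mantissa and set the implicit leading bit,
/// giving the 24-bit value used for arithmetic.
fn extract_mantissa(bits: u32) -> u32 {
    (bits & MANTISSA_MASK) | IMPLICIT_BIT
}

/// Clear the implicit bit so the mantissa fits back into bits 22-0.
fn store_mantissa(mantissa: u32) -> u32 {
    mantissa & MANTISSA_MASK
}

fn main() {
    // 0x40700000 encodes 3.75; its stored mantissa is 0x700000.
    let mantissa = extract_mantissa(0x4070_0000);
    println!("with implicit bit:    {:#08X}", mantissa);
    println!("without implicit bit: {:#08X}", store_mantissa(mantissa));
}
```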

Floating-point numbers are typically normalized, meaning there is a non-zero bit to the left of the radix point (decimal point) or the mantissa is zero. Technically speaking, when we perform arithmetic operations on the mantissa and the mantissa is greater than zero, the highest set bit of the mantissa MUST BE BIT 23 (the implicit leading bit).
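Normalization can be sketched as a pair of shift loops. This is an illustrative sketch, assuming the mantissa lives in a `u32` next to a signed exponent; the function name `normalize` is made up, and bits shifted out on the right are simply dropped.

```rust
/// Shift a non-zero mantissa until its highest set bit is bit 23,
/// adjusting the exponent so the represented value stays the same.
/// A zero mantissa is returned unchanged.
fn normalize(mut mantissa: u32, mut exponent: i32) -> (u32, i32) {
    if mantissa == 0 {
        return (0, exponent);
    }
    // Too large: shift right and raise the exponent.
    while mantissa >= (1 << 24) {
        mantissa >>= 1;
        exponent += 1;
    }
    // Too small: shift left and lower the exponent.
    while (mantissa & (1 << 23)) == 0 {
        mantissa <<= 1;
        exponent -= 1;
    }
    (mantissa, exponent)
}

fn main() {
    // Highest set bit is bit 22, so one left shift is needed.
    println!("{:?}", normalize(0x0040_0000, 0));
    // Highest set bit is bit 24, so one right shift is needed.
    println!("{:?}", normalize(0x0100_0000, 0));
}
```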

If the exponent field is greater than zero, the implicit leading bit is assumed to be 1. This means that the mantissa is actually 24 bits with an invisible leading bit of 1, and space is saved by not storing it.

If the exponent field is zero, then the implicit leading bit is assumed to be 0 as well. This is called a denormalized number.

The PS2 doesn't support denormalized numbers and, during arithmetic operations, truncates any denormalized number to zero.

Putting It All Together

In summary:

- The sign bit is 0 for positive numbers and 1 for negative numbers.

- The exponent field contains 127 plus the real exponent for single-precision.

- The exponent field is base 2.

- The first bit of the mantissa is typically assumed to be 1 and represented in docs as 1.mantissa.

Representing in Code

Floating-point numbers as a struct:

```rust
struct Float {
    sign: bool,
    exponent: u8,
    mantissa: u32,
}
```

Converting into a decimal (the raw 32-bit integer encoding):

```rust
impl Float {
    /// Convert the struct into a decimal
    fn to_decimal(&self) -> u32 {
        let mut result = 0u32;
        result |= (self.sign as u32) << 31;
        result |= (self.exponent as u32) << 23;
        result |= self.mantissa;
        result
    }
}
```

Converting Into a Float

Since PS2 floats don't follow the IEEE 754 standard, we will represent them as structs or in decimal form. Once in decimal form, it's useful to convert the decimal into a regular 64-bit float data type, or f64, to see its string representation. Even though a PS2 float is 32 bits, we don't use f32 because there are cases where a PS2 float in decimal form would result in "NaN" (not a number), "Inf" (infinity), or a bigger number than what an IEEE 754 f32 allows.

A 64-bit float, or f64, can represent these values. It also follows IEEE 754, but its 11-bit exponent field gives it a much larger range than the 8-bit exponent field of f32, which luckily covers the abnormal PS2 float cases.

Here is the algorithm for converting a decimal into a float:

```
num = (sign ? -1 : 1) * 2 ^ (exponent - 127) * (1.mantissa)
```

or

```
float num = (sign ? -1 : 1) * Math.pow(2, exponent - 127) * (mantissa / Math.pow(2, 23) + 1)
```

expanded:

```rust
// Uses the Float struct defined earlier.
impl Float {
    /// Build a Float from its decimal (raw 32-bit) form.
    /// Assumed definition: the article calls Float::new but never shows it.
    fn new(bits: u32) -> Self {
        Float {
            sign: (bits >> 31) == 1,
            exponent: ((bits >> 23) & 0xFF) as u8,
            mantissa: bits & 0x7F_FFFF,
        }
    }

    /// Convert a decimal into a floating-point number using the IEEE 754 formula.
    fn as_f64(&self) -> f64 {
        // Get real exponent value by subtracting bias
        // (cast first so the subtraction cannot underflow the u8)
        let exponent = self.exponent as i32 - 127;

        // Raise 2 to the power of the exponent
        let scale = 2f64.powi(exponent);

        // Get mantissa and put it behind the radix point
        let mut mantissa = self.mantissa as f64 / 2f64.powi(23);

        // Add implicit leading bit
        mantissa += 1.0;

        // Multiply mantissa by the scale
        let mut result = mantissa * scale;

        // If sign bit is set, make result negative
        if self.sign {
            result = -result;
        }

        result
    }
}

fn main() {
    // 3.75
    let float_struct = Float {
        sign: false,
        exponent: 0x80,
        mantissa: 0x700000,
    };

    // 3.75000000000000000000000000000000
    println!("Float representation: {:.32}", float_struct.as_f64());

    // 4.3
    let float_struct_2 = Float::new(0x4089999A);

    // Roughly 4.3; the exact stored value is 4.30000019073486328125
    println!("Float representation: {:.32}", float_struct_2.as_f64());
}
```

Differences from IEEE 754 Standard

NaN Does Not Exist

In the IEEE 754 standard, NaNs represent undefined or unrepresentable values, such as the result of a zero divided by zero. A NaN is encoded by filling the exponent field with ones, and some non-zero value in the mantissa. This allows the definition of multiple NaN values, depending on what is stored in the mantissa field.

An example of NaN encoding:

```
|31|30---------23|22-------------------------------------------0|
|s |  11111111   | xxx xxxx xxxx xxxx xxxx xxxx                 |
-----------------------------------------------------------------
```

The sign bit is ignored, the exponent field is filled with ones, and the mantissa can be anything except zero because a mantissa of zero would represent infinity.
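The encoding rule can be checked directly on the raw bits. The following is a small sketch; `is_ieee_nan` is a hypothetical helper name, and Rust's built-in `f32::is_nan` is only used to cross-check it.

```rust
/// Return true if the raw bits are an IEEE 754 single-precision NaN:
/// exponent field all ones and a non-zero mantissa. The sign is ignored.
fn is_ieee_nan(bits: u32) -> bool {
    let exponent = (bits >> 23) & 0xFF;
    let mantissa = bits & 0x007F_FFFF;
    exponent == 0xFF && mantissa != 0
}

fn main() {
    for bits in [0x7FC0_0000u32, 0xFFFF_FFFF, 0x7F80_0000, 0x3F80_0000] {
        println!(
            "{:#010X}: field check = {}, f32::is_nan = {}",
            bits,
            is_ieee_nan(bits),
            f32::from_bits(bits).is_nan()
        );
    }
}
```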

When NaNs are encountered on the PS2, they are treated as normal values, which means the PS2 can represent values greater than what the IEEE 754 standard allows.

If the mantissa of a NaN is filled with all ones, the PS2 treats these NaNs as either the maximum possible value Fmax / MAX or the minimum possible value -Fmax / -MAX depending on the sign bit.

Fmax / MAX or 0x7FFFFFFF:

```
|31|30---------23|22-------------------------------------------0|
| 0|  11111111   | 111 1111 1111 1111 1111 1111                 |
-----------------------------------------------------------------
```

-Fmax / -MAX or 0xFFFFFFFF:

```
|31|30---------23|22-------------------------------------------0|
| 1|  11111111   | 111 1111 1111 1111 1111 1111                 |
-----------------------------------------------------------------
```

When a PS2 float with the above NaN encoding is converted into a 32-bit float, we get "NaN". This is one of the limitations we talked about avoiding in Converting Into a Float when we want to print what the PS2 floating-point number represents. Luckily, a 64-bit float has a greater range, which allows us to print the value.
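To make the difference concrete, here is a sketch that interprets 0x7FFFFFFF both ways. It assumes the plain formula from Converting Into a Float with no special cases; the function name `ps2_bits_to_f64` is made up for this example.

```rust
/// Interpret a raw 32-bit pattern with the plain formula
/// sign * 2^(exponent - 127) * 1.mantissa, with no special cases.
/// Assumes a non-zero exponent field (not a denormal or zero).
fn ps2_bits_to_f64(bits: u32) -> f64 {
    let sign = if (bits >> 31) == 1 { -1.0 } else { 1.0 };
    let exponent = ((bits >> 23) & 0xFF) as i32 - 127;
    // 8_388_608 is 2^23; adding 1.0 restores the implicit leading bit.
    let mantissa = 1.0 + (bits & 0x007F_FFFF) as f64 / 8_388_608.0;
    sign * 2f64.powi(exponent) * mantissa
}

fn main() {
    let fmax: u32 = 0x7FFF_FFFF;
    // The host's f32 treats this pattern as NaN.
    println!("as f32: {}", f32::from_bits(fmax));
    // The plain formula gives a finite value larger than f32::MAX.
    println!("as f64: {:e}", ps2_bits_to_f64(fmax));
    println!("f32::MAX: {:e}", f32::MAX);
}
```

The f64 result is finite because f64 has room for an exponent of 128, which f32 reserves for NaN and infinity.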

Infinity Does Not Exist

In the IEEE 754 standard, infinity represents values whose magnitude is too large to be represented. This is done by setting all the exponent bits to 1 and setting the mantissa to 0. The sign bit can be either 0 or 1, with 0 representing positive infinity, and 1 representing negative infinity.

Positive infinity encoding:

```
|31|30---------23|22-------------------------------------------0|
| 0|  11111111   | 000 0000 0000 0000 0000 0000                 |
-----------------------------------------------------------------
```

Negative infinity encoding:

```
|31|30---------23|22-------------------------------------------0|
| 1|  11111111   | 000 0000 0000 0000 0000 0000                 |
-----------------------------------------------------------------
```

When infinity is encountered on the PS2, it is treated as a normal value, which means the PS2 can represent values greater than what the IEEE 754 standard allows.

If we try to convert a PS2 float with the above infinity encoding into a 32-bit float, we will get "+Inf" or "-Inf". This is one of the limitations we talked about avoiding in Converting Into a Float when we want to debug what the PS2 floating-point number represents. Luckily, a 64-bit float has a greater range, which allows us to print what the PS2 floating-point number represents.
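As a quick sketch, the infinity encoding can be detected from the fields, and the plain formula turns it into an ordinary power of two. The helper name `is_ieee_infinity` is an assumption for this example.

```rust
/// Return true if the raw bits are IEEE 754 +Inf or -Inf:
/// exponent field all ones and a zero mantissa, with either sign.
fn is_ieee_infinity(bits: u32) -> bool {
    (bits & 0x7FFF_FFFF) == 0x7F80_0000
}

fn main() {
    let pos_inf_bits: u32 = 0x7F80_0000;
    println!("IEEE infinity? {}", is_ieee_infinity(pos_inf_bits));

    // The host's f32 reports infinity for this pattern.
    println!("as f32: {}", f32::from_bits(pos_inf_bits));

    // With the plain formula, exponent field 255 and mantissa 0 give
    // 1.0 * 2^(255 - 127) = 2^128, which fits comfortably in an f64.
    let as_normal_value = 2f64.powi(255 - 127);
    println!("as f64: {:e}", as_normal_value);
}
```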

Denormalized Numbers Do Not Exist

Numbers with the exponent field set to 0 and a non-zero mantissa are denormalized numbers, regardless of the sign. (If the mantissa is also zero, the value is simply zero.)

Denormalized number encoding:

```
|31|30---------23|22-------------------------------------------0|
|s |  00000000   | xxx xxxx xxxx xxxx xxxx xxxx                 |
-----------------------------------------------------------------
```

Denormalized numbers do not exist on the PS2 and during arithmetic operations, the PS2 truncates denormalized numbers to zero.
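A minimal sketch of this flush-to-zero step on the raw bits follows. It assumes the sign bit is kept, so the result is +0 or -0; that detail is not stated above, and the function name `flush_denormal` is hypothetical.

```rust
/// Treat any encoding with a zero exponent field as zero.
/// Assumption: the sign bit is preserved, giving +0 or -0.
fn flush_denormal(bits: u32) -> u32 {
    if ((bits >> 23) & 0xFF) == 0 {
        // Keep only the sign bit; clear the mantissa.
        bits & 0x8000_0000
    } else {
        bits
    }
}

fn main() {
    // Smallest positive denormal becomes +0.
    println!("{:#010X}", flush_denormal(0x0000_0001));
    // A negative denormal becomes -0 under the sign-preserving assumption.
    println!("{:#010X}", flush_denormal(0x807F_FFFF));
    // Normal numbers (here 1.0) pass through unchanged.
    println!("{:#010X}", flush_denormal(0x3F80_0000));
}
```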

Rounding Mode Is Always Rounding to Zero

The IEEE 754 standard supports multiple rounding modes, including "Rounding to Nearest", "Rounding to Zero", and "Rounding to Infinity" (positive or negative). The PS2 only supports "Rounding to Zero". Also, the PS2's "Rounding to Zero" does not work with the full value before rounding, unlike the IEEE 754 standard, so its results may differ from IEEE 754 "Rounding to Zero". This difference is usually restricted to the least significant bit, but the details are not entirely known.

Floating-point operations need to be rounded because floating-point tries to represent infinitely many real numbers with a finite amount of memory. When performing arithmetic operations on floating-point numbers, the result is rounded to fit into its finite representation. This can introduce rounding errors and is a limitation of floating-point numbers.

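Some rounding-error examples in standard IEEE 754:

```
0.1 + 0.2 == 0.30000000000000004

4.9 - 4.845 == 0.055 == false

4.9 - 4.845 == 0.055000000000000604 == true

0.1 + 0.2 + 0.3 == 0.6 == false

0.1 + 0.2 + 0.3 == 0.60000002384185791015625000000000 == true
```

"Rounding to Zero", or truncation, means discarding the digits that do not fit instead of rounding them, so the magnitude of the result never increases. The PS2 truncates at the last mantissa bit. To keep things readable, the examples here truncate at the decimal point instead: discard the fraction and keep what's to the left of the decimal point.

The same kind of examples with rounding to zero applied: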

```
round_to_zero(0.1 + 0.2) == 0

round_to_zero(4.9 - 4.845) == 0

round_to_zero(4.9 - 2.7) == 2.0

round_to_zero(9.4 - 3.2) == 6.0
```
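The examples above truncate at the decimal point. At the bit level, truncation happens at the last mantissa bit. The following is a minimal sketch of that idea, assuming we convert an f64 to an f32 and step back one bit pattern whenever the default round-to-nearest conversion overshot. The helper name `to_f32_toward_zero` is made up for this illustration, and it does not model the PS2's undocumented rounding quirks.

```rust
/// Convert an f64 to f32, rounding toward zero instead of to nearest.
/// Hypothetical helper for illustration only.
fn to_f32_toward_zero(x: f64) -> f32 {
    // `as` rounds to the nearest representable f32.
    let nearest = x as f32;
    // If rounding moved the value away from zero, step back by one
    // bit pattern, which is the next f32 closer to zero.
    if (nearest as f64).abs() > x.abs() {
        f32::from_bits(nearest.to_bits() - 1)
    } else {
        nearest
    }
}

fn main() {
    let x = 0.1_f64;
    // Round to nearest picks 0x3DCCCCCD, slightly above 0.1.
    println!("nearest:     {:#010X}", (x as f32).to_bits());
    // Round to zero picks 0x3DCCCCCC, slightly below 0.1.
    println!("toward zero: {:#010X}", to_f32_toward_zero(x).to_bits());
}
```

Values that are exactly representable, such as 0.5 or 3.75, are returned unchanged because no rounding takes place.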

Final Thoughts

- PS2 floating-point implementation deviates from the official IEEE 754 specifications.

- One solution is emulating floating-point operations using software which is super slow.

- PS2 floating-point implementation lacks NaN, Infinity, and denormalized numbers.

- PS2 floats always round to zero.

In part 2, the next article, we will learn how to perform arithmetic using PS2 floats.

About the Author 👨🏾💻

I'm Gregory Gaines, a simple software engineer at Google that writes whatever's on his mind. If you want more content, follow me on Twitter at @GregoryAGaines.

If you have any questions, hit me up on Twitter (@GregoryAGaines); we can talk about it.

Thanks for reading!

References

- PS2Tek

- IEEE 754 Wikipedia

- IEEE 754 Floating Point Converter

- Single-precision floating-point format

- NaN Wikipedia

- IEEE Standard 754 Floating Point Numbers

- IEEE 754

- Rounding Wikipedia

- round towards zero in java

- Floating-point arithmetic Wikipedia

- Round-off Errors

- Floating point numbers and rounding errors—a crash course in Python

- What causes floating point rounding errors?

- What is a simple example of floating point/rounding error?

- What Every Computer Scientist Should Know About Floating-Point Arithmetic

- Floating Point/Normalization

Buy Me a Coffee
